Audio and speech

Explore audio and speech features in the OpenAI API.

The OpenAI API provides a range of audio capabilities. If you know what you want to build, find your use case below to get started. If you're not sure where to start, read this page as an overview.

A tour of audio use cases

LLMs can process audio by using sound as input, creating sound as output, or both. OpenAI has several API endpoints that help you build audio applications or voice agents.

Voice agents

Voice agents understand audio to handle tasks and respond in natural language. There are two main ways to build one: use a speech-to-speech model with the Realtime API, or chain together a speech-to-text model, a text language model that processes the request, and a text-to-speech model that speaks the response. Speech-to-speech offers lower latency and more natural conversation, while chaining is a reliable way to extend an existing text-based agent into a voice agent.
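The chained approach can be sketched as three pluggable stages. This is a minimal, offline sketch: the ChainedVoiceAgent class and the stage type aliases are hypothetical names, and the lambdas in the demo stand in for real calls to a speech-to-text model, a text model, and a text-to-speech model.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical stage signatures: each stage is a plain callable, so any
# speech-to-text, text model, or text-to-speech backend can be plugged in.
SpeechToText = Callable[[bytes], str]
TextAgent = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class ChainedVoiceAgent:
    """Chains speech-to-text -> text model -> text-to-speech for one turn."""

    transcribe: SpeechToText
    respond: TextAgent
    synthesize: TextToSpeech

    def handle_turn(self, audio_in: bytes) -> Tuple[str, str, bytes]:
        # 1. Turn the user's audio into text.
        user_text = self.transcribe(audio_in)
        # 2. Let the existing text-based agent produce a reply.
        reply_text = self.respond(user_text)
        # 3. Turn the reply back into audio for playback.
        audio_out = self.synthesize(reply_text)
        return user_text, reply_text, audio_out


if __name__ == "__main__":
    # Stub stages stand in for real API calls so the sketch runs offline.
    agent = ChainedVoiceAgent(
        transcribe=lambda audio: audio.decode("utf-8"),
        respond=lambda text: f"You said: {text}",
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(agent.handle_turn(b"what's the weather?"))
```

Because each stage is an ordinary callable, the text agent in the middle can be reused unchanged; the cost is an extra round trip per stage, which is why speech-to-speech tends to have lower latency.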

Streaming audio

Process audio in real time to build voice agents and other low-latency applications, including transcription use cases. You can stream audio in and out of a model with the Realtime API. Our advanced speech models provide automatic speech recognition for improved accuracy, low-latency interactions, and multilingual support.
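Streaming into the Realtime API means sending audio in small chunks over a persistent connection. The sketch below only builds the JSON client events and does not open a connection. It assumes the event types input_audio_buffer.append (with base64-encoded audio) and input_audio_buffer.commit, and a default input format of 16-bit mono PCM at 24 kHz; verify these against the current Realtime API reference. As far as we understand, the explicit commit is only needed when server-side turn detection is turned off.

```python
import base64
import json
from typing import Iterator, List

# Assumed default Realtime API input format: 16-bit little-endian PCM,
# mono, 24 kHz. Verify against the current Realtime API reference.
SAMPLE_RATE_HZ = 24_000
BYTES_PER_SAMPLE = 2


def chunk_pcm16(pcm: bytes, chunk_ms: int = 100) -> Iterator[bytes]:
    """Split raw PCM16 audio into chunks of roughly chunk_ms milliseconds."""
    chunk_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * chunk_ms // 1000
    for start in range(0, len(pcm), chunk_bytes):
        yield pcm[start:start + chunk_bytes]


def audio_append_events(pcm: bytes, chunk_ms: int = 100) -> List[str]:
    """Build JSON client events that stream audio into the input buffer,
    followed by a commit event that marks the end of the user's turn."""
    events = [
        json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        })
        for chunk in chunk_pcm16(pcm, chunk_ms)
    ]
    events.append(json.dumps({"type": "input_audio_buffer.commit"}))
    return events


if __name__ == "__main__":
    one_second_of_silence = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)
    events = audio_append_events(one_second_of_silence)
    print(len(events))  # 11: ten 100 ms append events plus one commit
```

Small chunks (here about 100 ms each) let the model start processing before the user finishes speaking, which is where the latency advantage of streaming comes from.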

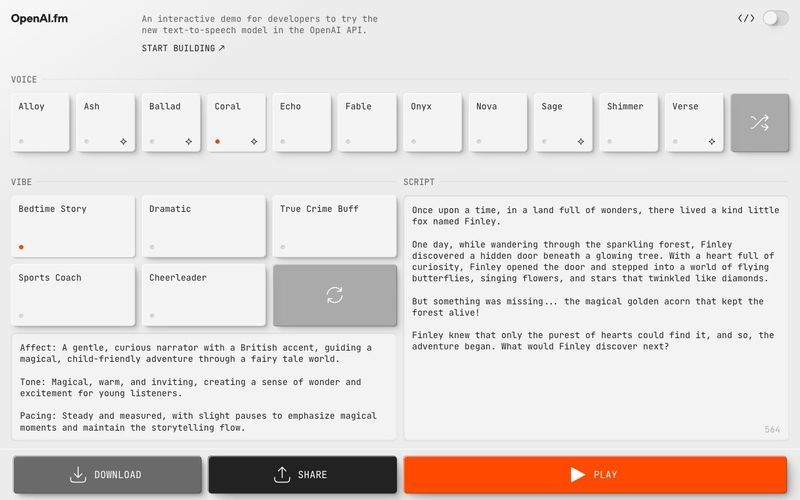

Text to speech

To turn text into speech, use the audio/speech endpoint of the Audio API. Compatible models are gpt-4o-mini-tts, tts-1, and tts-1-hd. With gpt-4o-mini-tts, you can also instruct the model to speak in a particular way or tone of voice.
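A text-to-speech call is a single JSON POST. The sketch below builds, but does not send, such a request with only the standard library. It assumes the endpoint URL https://api.openai.com/v1/audio/speech, the body fields model, input, voice, and instructions, and the voice name "alloy"; build_speech_request is a hypothetical helper, so check the field names against the Audio API reference before relying on them.

```python
import json
import urllib.request
from typing import Optional

SPEECH_URL = "https://api.openai.com/v1/audio/speech"
# Models named on this page for the audio/speech endpoint.
TTS_MODELS = {"gpt-4o-mini-tts", "tts-1", "tts-1-hd"}


def build_speech_request(
    api_key: str,
    text: str,
    model: str = "gpt-4o-mini-tts",
    voice: str = "alloy",
    instructions: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST to the audio/speech endpoint."""
    if model not in TTS_MODELS:
        raise ValueError(f"unsupported model: {model}")
    body = {"model": model, "input": text, "voice": voice}
    if instructions is not None:
        # Steering tone or style is a gpt-4o-mini-tts feature.
        if model != "gpt-4o-mini-tts":
            raise ValueError("instructions are only supported by gpt-4o-mini-tts")
        body["instructions"] = instructions
    return urllib.request.Request(
        SPEECH_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_speech_request("YOUR_API_KEY", "Hello!", instructions="Speak cheerfully.")
    print(req.get_method(), req.full_url)
```

Passing the request to urllib.request.urlopen would send it and return the synthesized audio bytes (MP3 by default, as we understand it). The helper rejects instructions for tts-1 and tts-1-hd rather than silently dropping them.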

Speech to text

To convert speech to text, use the audio/transcriptions endpoint of the Audio API. Compatible models are gpt-4o-transcribe, gpt-4o-mini-transcribe, and whisper-1. With streaming, you can continuously pass in audio and receive a continuous stream of text back.
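Transcription requests upload the audio file as multipart/form-data. The sketch below assembles that body by hand with the standard library and does not send it. It assumes the URL https://api.openai.com/v1/audio/transcriptions, the form fields file, model, and stream, and that streamed output is offered by the gpt-4o transcription models but not whisper-1; build_transcription_request and its helpers are hypothetical names.

```python
import urllib.request
import uuid

TRANSCRIPTIONS_URL = "https://api.openai.com/v1/audio/transcriptions"
# Models named on this page for the audio/transcriptions endpoint.
STT_MODELS = {"gpt-4o-transcribe", "gpt-4o-mini-transcribe", "whisper-1"}


def _text_part(boundary: str, name: str, value: str) -> bytes:
    """One plain form field in multipart/form-data encoding."""
    return (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
        f"{value}\r\n"
    ).encode("utf-8")


def _file_part(boundary: str, filename: str, data: bytes) -> bytes:
    """The uploaded audio file as a multipart/form-data part."""
    header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    return header + data + b"\r\n"


def build_transcription_request(
    api_key: str,
    audio: bytes,
    filename: str = "speech.wav",
    model: str = "gpt-4o-transcribe",
    stream: bool = False,
) -> urllib.request.Request:
    """Build (but do not send) a POST to the audio/transcriptions endpoint."""
    if model not in STT_MODELS:
        raise ValueError(f"unsupported model: {model}")
    if stream and model == "whisper-1":
        # Assumption: streamed transcription is only offered by the gpt-4o models.
        raise ValueError("streaming is not supported for whisper-1")
    boundary = uuid.uuid4().hex
    parts = [_text_part(boundary, "model", model)]
    if stream:
        parts.append(_text_part(boundary, "stream", "true"))
    parts.append(_file_part(boundary, filename, audio))
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))  # closing boundary
    return urllib.request.Request(
        TRANSCRIPTIONS_URL,
        data=b"".join(parts),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
        method="POST",
    )
```

In practice the official SDKs handle this encoding for you; the hand-built version just makes the request shape visible. Without stream, the response should be JSON containing the transcribed text.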

In summary, the OpenAI API can both process and generate audio, supporting voice agents, real-time streaming, text-to-speech, and speech-to-text.

Voice agents can use a speech-to-speech model directly or chain a speech-to-text model, a text model, and a text-to-speech model. The Realtime API streams audio in and out for low-latency interactions, while models such as gpt-4o-mini-tts and whisper-1 handle speech synthesis and transcription, respectively.